Into the Future of SEO

BY Ryan Conley


The rapidly increasing sophistication of search engines is changing the nature of SEO. The part of SEO that focuses on understanding how to game the search engines – without respect to improving the content or the user experience – is dying.

Google's stated mission is to “organize the world's information.” To that end, Google and other search engines want to link users to the very best and most relevant websites for any given query. The best sites, objectively, are not those managed with the best SEO practices, but those with the best content – the ones that large numbers of people regard as good sources of information.

An ongoing battle is being fought between the search engine companies' computer programmers and some SEO strategists. Part of SEO has always been about finding “shortcuts” to better rankings with a minimum of expense and time. Those shortcuts range from blatant keyword spam to huge amounts of not-quite-useless pseudo-content. The programmers, for their part, are single-mindedly focused on improving the user experience by returning more relevant links. To do so, they must teach their computers to recognize links and content created as shortcuts and to rank them much lower than information created to fulfill people's needs.

Here is the kicker: SEO cannot win this battle.

Panda

When search engines were a new technology, it was relatively easy to fool even the most sophisticated computers into believing that pure spam was actually relevant content. Site creators could stuff pages with keywords beyond the point of turning the text into gibberish and create or purchase low-quality links across the web, and search engines were none the wiser.

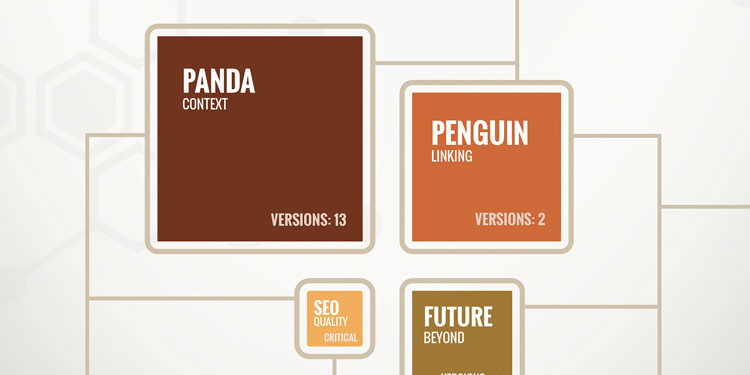

Now, however, those elementary tactics are largely useless against search engines. The sophistication of search algorithms and the processing power available to programmers are such that computers are able to “learn” what makes web content more or less valuable. In 2011, Google implemented a change to its algorithms that it called "Panda," which has since been updated 13 times. The company had recently made a breakthrough in a process called “machine learning,” and used it to teach its computers how to recognize good content. Google's human quality raters used objective and subjective criteria to rate a large number of sites, and Google's engineers then fed those sites and their respective ratings into the company's computers to teach them what makes web content desirable or undesirable to users.

Through machine learning, search engine computers are now able to understand the context of information – not just the words – and that is becoming an important part of how they rank pages. It used to be that a website that ranked highly for a particular search phrase would almost certainly contain that phrase, and other pages around the web would link to the site using that phrase as the anchor text. That is no longer necessarily true. Now, context alone is a huge factor in rankings.

Here is an example of context at work. The number one Google result for the phrase “backlink analysis” is a popular tool called Open Site Explorer. Backlink analysis refers to an SEO process of examining links. This is a competitive search phrase, and the competitors are SEO experts. However, the Open Site Explorer page does not overtly target that phrase by any apparent means. How, then, did it claim the top spot? The answer is context. Many sites around the web talk about Open Site Explorer when they talk about backlink analysis. These sites need not link to the tool at all in order to influence its ranking in search results.
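To make the idea of context concrete, here is a minimal sketch of counting how often a brand name is mentioned on pages that discuss a given phrase, whether or not those pages link to anything. It is a toy illustration only, not a description of how any search engine actually measures context. The function name `co_mention_rate` and the sample page texts are hypothetical.

```python
def co_mention_rate(pages, phrase, brand):
    """Of the pages that mention `phrase`, return the fraction that also mention `brand`.

    Matching is a plain case-insensitive substring check; links are ignored entirely.
    """
    phrase, brand = phrase.lower(), brand.lower()
    about_phrase = [text for text in pages if phrase in text.lower()]
    if not about_phrase:
        return 0.0
    both = sum(1 for text in about_phrase if brand in text.lower())
    return both / len(about_phrase)


# Hypothetical page texts, invented for illustration.
pages = [
    "For backlink analysis, many people start with Open Site Explorer.",
    "Our backlink analysis checklist covers anchor text and link sources.",
    "Open Site Explorer makes backlink analysis quick for small sites.",
    "A guide to writing better page titles.",
]

rate = co_mention_rate(pages, "backlink analysis", "Open Site Explorer")
print(f"Brand appears on {rate:.0%} of pages discussing the phrase")
```

A high rate across many independent pages is the kind of signal the example above describes: the tool is associated with the phrase even where no keyword-rich link exists.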

Because search engines understand context, they are increasingly able to distinguish between content created with search engines in mind and content created with users in mind. And because the latter is far more useful to the user, it is favored in the rankings while the former is penalized.

Penguin

Another significant change to Google's algorithms, introduced in 2012 and recently updated, is called "Penguin." What Panda did for content, Penguin did for links. A site's “link profile” is the aggregate of all links on the web that point to it and their characteristics. With the Penguin updates, Google is better able to distinguish between a link profile that is the result of an SEO strategy and one that is the result of a genuine reputation as a useful site.

The most important thing to know about links post-Penguin is that your anchor text needs to be diversified. “Anchor text” refers to the clickable words in a web link. For SEO purposes, links are uniquely valuable when they contain anchor text made up of the particular phrases for which you want to rank well – your “money keywords.” Old-school SEO strategy says that the more links that point to your website with anchor text matching your money keywords, the higher you will rank on a search for those words. Strictly speaking, that is no longer true. There is now a point at which such links will give you rapidly diminishing returns and even become counterproductive.

Search engines want to return results that are valuable and relevant, not simply optimized. What does a link profile for a truly valuable information resource look like? Because it is made of many links created by many different individuals in widely varying contexts, the anchor text is also widely varied. It includes many instances of the domain name (yourlawfirm.com), the full URL (www.yourlawfirm.com), and the name of the business (Your Law Firm). It will also include phrases like “click here,” “this blog post,” and “my law firm.” Comparatively few of those links will have anchor text with competitive money keywords, such as “New York divorce attorney.”

If the majority of the links that point to your site have your money keywords as anchor text, search engines will infer that they are disproportionately the result of targeted SEO efforts as opposed to organic, unsolicited links. As a result, they will rank your site lower than if your anchor text were diversified. A recent report from a well-known SEO firm showed that sites whose inbound links used competitive key phrases as anchor text more than 60 percent of the time saw their rankings drop following a Penguin update. At higher rates, that drop in rankings became very steep indeed.
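As a rough way to audit your own profile, the share of inbound links that use money keywords can be computed from a list of anchor texts. The sketch below assumes you already have such a list (for example, exported from a backlink tool). The function name `money_keyword_share` and the sample anchors are hypothetical, and the 60 percent figure is simply the one from the report cited above, not a threshold published by any search engine.

```python
def money_keyword_share(anchor_texts, money_keywords):
    """Return the fraction of anchors containing any money keyword (case-insensitive)."""
    if not anchor_texts:
        return 0.0
    keywords = [k.lower() for k in money_keywords]
    hits = sum(
        1 for anchor in anchor_texts
        if any(k in anchor.lower() for k in keywords)
    )
    return hits / len(anchor_texts)


# Hypothetical anchor texts for illustration.
anchors = [
    "yourlawfirm.com",
    "www.yourlawfirm.com",
    "Your Law Firm",
    "click here",
    "this blog post",
    "New York divorce attorney",
    "best New York divorce attorney",
    "my law firm",
]

share = money_keyword_share(anchors, ["New York divorce attorney"])
REPORTED_RISK_LEVEL = 0.60  # figure from the report cited above; an assumption, not a rule

print(f"Money-keyword share: {share:.0%}")
if share > REPORTED_RISK_LEVEL:
    print("Anchor text looks heavily optimized; consider diversifying.")
```

In this sample, two of eight anchors contain the money keyword, which is well under the level the report associated with ranking drops.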

A natural link profile is diversified in other ways as well. Its links come from sites with many different domain extensions. The vast majority of links to your firm's site are probably on .com domains. Therefore, any that come from .edu, .gov, and other domains are particularly valuable to you. Diversity of content also leads to diversity of links. For instance, if you create a video or podcast, that content is probably hosted remotely alongside links back to your site, giving you new link sources.
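A quick tally of domain extensions among linking pages shows how concentrated a profile is. This is a minimal sketch that assumes you have a list of the URLs that link to you. The function name `extension_counts` and the sample URLs are placeholders, and the last dot-separated label of the hostname is used as a simple stand-in for the extension (so a host ending in .co.uk would be counted as .uk).

```python
from collections import Counter
from urllib.parse import urlparse


def extension_counts(link_urls):
    """Count linking pages by the final label of their hostname (.com, .edu, ...)."""
    counts = Counter()
    for url in link_urls:
        host = urlparse(url).hostname or ""
        if "." in host:
            counts["." + host.rsplit(".", 1)[1]] += 1
    return counts


# Placeholder URLs for illustration only.
links = [
    "https://www.example.com/blog/post",
    "https://news.example.com/story",
    "https://law.example.edu/resources",
    "https://courts.example.gov/directory",
    "https://podcasts.example.org/episode-12",
]

counts = extension_counts(links)
for extension, total in counts.most_common():
    print(extension, total)
```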

However, the most valuable portion of your link profile post-Penguin is outside your direct control. It comes from the greater web in the form of unsolicited links from users who use your site to fulfill a need. A great link profile therefore takes more than a little time to build. Great content may be created at an accelerated pace by committing time, effort, and money to the project, but a great link profile only comes after a site builds a reputation for delivering useful information.

The Future

The rapidly increasing sophistication of search engines is changing the nature of SEO. The part of SEO that focuses on understanding how to game the search engines – without respect to improving the content or the user experience – is dying. Instead, the industry is becoming focused on generating high-quality content and knowing where and how to organize and deliver that content. SEO firms and clients who hold onto outdated notions of what constitutes a strong web presence are quickly being left behind. Shortcuts to high rankings are steadily being curbed and eliminated. The search engine user experience improves continuously as the companies' machine learning processes become more sophisticated.

Panda code recognizes content that lacks context. Penguin recognizes artificial link profiles. Some future update may very well allow a computer to distinguish reliably between merely adequate content and truly compelling and brilliant content. That is a death knell for those who rely on gaming the search engines, but it is great news both for users and for those with a diverse, informative, and high-quality web presence.

The path of computer learning capabilities is an exponential curve. Keep your firm's web practice ahead of the curve by investing in a diversified online presence full of high-quality content that fulfills a need. The next time Google's code is updated, you will probably find your site rewarded with a bump in its rankings and traffic while your cost-cutting competitors are scrambling to make up lost ground.
